Hadoop - HDFS Overview

The Hadoop Distributed File System (HDFS) was developed using a distributed file system design and runs on commodity hardware. Unlike many other distributed systems, HDFS is highly fault-tolerant and is designed to be deployed on low-cost hardware.

HDFS holds very large amounts of data and provides easy access to it. To store such large volumes, files are spread across multiple machines and stored redundantly, so that the system can recover from data loss when a machine fails. HDFS also makes the data available to applications for parallel processing.

Features of HDFS

- It is suitable for distributed storage and processing.

- Hadoop provides a command interface to interact with HDFS.

- The built-in servers of the namenode and datanode help users easily check the status of the cluster.

- It provides streaming access to file system data.

- HDFS provides file permissions and authentication.

HDFS Architecture

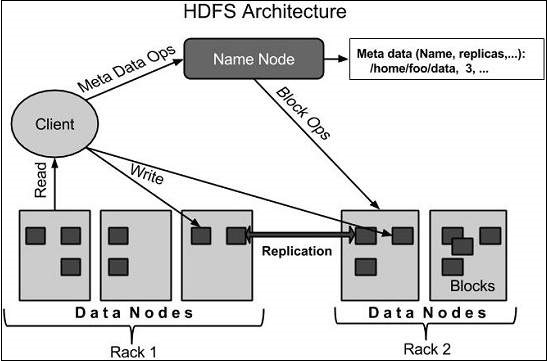

Given below is the architecture of a Hadoop File System.

HDFS follows the master-slave architecture and it has the following elements.

Namenode

The namenode is software that runs on commodity hardware with the GNU/Linux operating system. The machine running the namenode acts as the master server and performs the following tasks:

- Manages the file system namespace.

- Regulates clients' access to files.

- Executes file system operations such as renaming, closing, and opening files and directories.

Datanode

The datanode is software that runs on commodity hardware with the GNU/Linux operating system. Every node (commodity hardware/system) in a cluster runs a datanode. These nodes manage the data storage attached to their system.

- Datanodes perform read-write operations on the file system, as per client requests.

- They also perform operations such as block creation, deletion, and replication according to the instructions of the namenode.

Block

User data is stored in HDFS files. Each file is divided into one or more segments, which are stored on individual datanodes. These file segments are called blocks. In other words, a block is the minimum amount of data that HDFS can read or write. The default block size is 64 MB in Hadoop 1.x (128 MB in Hadoop 2.x and later), and it can be changed in the HDFS configuration as needed.
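As a rough illustration of how a file maps onto blocks, the sketch below computes how many blocks a file of a given size would occupy. The helper name blocks_needed and the 200 MB example file are hypothetical; when the file size is not a multiple of the block size, the last block is simply smaller than the others.

```shell
#!/usr/bin/env bash
# Sketch: number of HDFS blocks needed for a file (ceiling division).
# blocks_needed is a hypothetical helper, not part of Hadoop.
blocks_needed() {
  local file_bytes=$1 block_bytes=$2
  echo $(( (file_bytes + block_bytes - 1) / block_bytes ))
}

block_size=$(( 64 * 1024 * 1024 ))    # 64 MB block size
file_size=$(( 200 * 1024 * 1024 ))    # a 200 MB file, chosen for illustration

# Three full 64 MB blocks plus one 8 MB block.
blocks_needed "$file_size" "$block_size"   # prints 4
```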

Goals of HDFS

- Fault detection and recovery: Since HDFS runs on a large number of commodity hardware components, component failure is frequent. HDFS therefore needs mechanisms for quick and automatic fault detection and recovery.

- Huge datasets: HDFS should scale to hundreds of nodes per cluster to serve applications with huge datasets.

- Hardware at data: A requested task runs efficiently when the computation takes place near the data. Especially where huge datasets are involved, this reduces network traffic and increases throughput.

Hadoop - HDFS Operations

Starting HDFS

Before first use, you have to format the configured HDFS file system. Open the namenode (HDFS server) and execute the following command.

$ hadoop namenode -format

After formatting HDFS, start the distributed file system. The following command starts the namenode as well as the datanodes as a cluster.

$ start-dfs.sh
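One common way to confirm that the daemons came up is to look for them in the output of jps, the JDK tool that lists running Java processes. The sketch below assumes jps is on the PATH and that the daemons are listed under the names NameNode and DataNode; has_daemon is a hypothetical helper, not a Hadoop command.

```shell
#!/usr/bin/env bash
# has_daemon NAME: succeed if a process with exactly this name appears in
# jps-style output ("<pid> <name>" per line) read from standard input.
has_daemon() {
  awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# Usage, assuming jps is available on this machine:
#   jps | has_daemon NameNode && echo "namenode is running"
#   jps | has_daemon DataNode && echo "datanode is running"
```

Matching the second column exactly keeps a SecondaryNameNode entry from being mistaken for the namenode itself.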

Listing Files in HDFS

After loading data into the server, you can list the files in a directory or check the status of a file using ‘ls’. Given below is the syntax of ls, which accepts a directory or a filename as an argument.

$ $HADOOP_HOME/bin/hadoop fs -ls <args>

Inserting Data into HDFS

Assume we have data in a file called file.txt on the local system that needs to be saved in the HDFS file system. Follow the steps given below to insert the file into the Hadoop file system.

Step 1

You have to create an input directory.

$ $HADOOP_HOME/bin/hadoop fs -mkdir /user/input

Step 2

Transfer and store a data file from the local system to the Hadoop file system using the put command.

$ $HADOOP_HOME/bin/hadoop fs -put /home/file.txt /user/input

Step 3

You can verify the file using the ls command.

$ $HADOOP_HOME/bin/hadoop fs -ls /user/input
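The three steps above can be combined into one function, sketched here under a few assumptions. hdfs_upload and the HADOOP_CMD override are hypothetical names introduced for illustration; the override only exists so the function can be dry-run (for example with HADOOP_CMD=echo) on a machine without Hadoop. The -p flag on -mkdir is assumed to be available, which holds on Hadoop 2.x and later; it keeps the function from failing when the directory already exists.

```shell
#!/usr/bin/env bash
# Sketch: create the target directory, copy a local file in, then list it.
# hdfs_upload and HADOOP_CMD are hypothetical, not part of Hadoop.
hdfs_upload() {
  local local_file=$1 hdfs_dir=$2
  local hadoop=${HADOOP_CMD:-$HADOOP_HOME/bin/hadoop}

  # Step 1: create the input directory (-p assumes Hadoop 2.x or later).
  "$hadoop" fs -mkdir -p "$hdfs_dir" || return 1
  # Step 2: copy the local file into HDFS.
  "$hadoop" fs -put "$local_file" "$hdfs_dir" || return 1
  # Step 3: list the directory to verify the upload.
  "$hadoop" fs -ls "$hdfs_dir"
}

# Usage: hdfs_upload /home/file.txt /user/input
```

Stopping at the first failing step avoids listing a directory that was never populated.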

Retrieving Data from HDFS

Assume we have a file in HDFS called outfile. Given below is a simple demonstration for retrieving the required file from the Hadoop file system.

Step 1

First, view the data from HDFS using the cat command.

$ $HADOOP_HOME/bin/hadoop fs -cat /user/output/outfile

Step 2

Copy the file from HDFS to the local file system using the get command.

$ $HADOOP_HOME/bin/hadoop fs -get /user/output/ /home/hadoop_tp/
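When retrieval is scripted, it can help to check the local side before calling get, so that a repeated run does not collide with an earlier copy. The following is a minimal sketch; hdfs_fetch and the HADOOP_CMD override are hypothetical names, and the policy of refusing to overwrite is a design choice made for this example, not something the get command requires.

```shell
#!/usr/bin/env bash
# Sketch: copy an HDFS path into a local directory, refusing to overwrite.
# hdfs_fetch and HADOOP_CMD are hypothetical, not part of Hadoop.
hdfs_fetch() {
  local hdfs_src=$1 local_dir=$2
  local hadoop=${HADOOP_CMD:-$HADOOP_HOME/bin/hadoop}
  # The local name that a copy into local_dir would receive.
  local target="$local_dir/$(basename "$hdfs_src")"

  if [ -e "$target" ]; then
    echo "refusing to overwrite $target" >&2
    return 1
  fi
  mkdir -p "$local_dir" || return 1
  "$hadoop" fs -get "$hdfs_src" "$local_dir"
}

# Usage: hdfs_fetch /user/output/outfile /home/hadoop_tp
```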

Shutting Down the HDFS

You can shut down HDFS using the following command.

$ stop-dfs.sh

Hadoop - Command Reference

HDFS Command Reference

There are many more commands in "$HADOOP_HOME/bin/hadoop fs" than are demonstrated here, although these basic operations will get you started. Running $HADOOP_HOME/bin/hadoop fs with no additional arguments lists all the commands that can be run with the FsShell system. Furthermore, $HADOOP_HOME/bin/hadoop fs -help commandName displays a short usage summary for the operation in question, if you are stuck.

A table of all the operations is shown below. The following conventions are used for parameters:

"<path>" means any file or directory name. "<path>..." means one or more file or directory names. "<file>" means any filename. "<src>" and "<dest>" are path names in a directed operation. "<localSrc>" and "<localDest>" are paths as above, but on the local file system.

All other files and path names refer to the objects inside HDFS.

| Command | Description |
|---|---|
| -ls <path> | Lists the contents of the directory specified by path, showing the names, permissions, owner, size and modification date for each entry. |
| -lsr <path> | Behaves like -ls, but recursively displays entries in all subdirectories of path. |
| -du <path> | Shows disk usage, in bytes, for all the files which match path; filenames are reported with the full HDFS protocol prefix. |
| -dus <path> | Like -du, but prints a summary of disk usage of all files/directories in the path. |
| -mv <src> <dest> | Moves the file or directory indicated by src to dest, within HDFS. |
| -cp <src> <dest> | Copies the file or directory identified by src to dest, within HDFS. |
| -rm <path> | Removes the file or empty directory identified by path. |
| -rmr <path> | Removes the file or directory identified by path. Recursively deletes any child entries (i.e., files or subdirectories of path). |
| -put <localSrc> <dest> | Copies the file or directory from the local file system identified by localSrc to dest within HDFS. |
| -copyFromLocal <localSrc> <dest> | Identical to -put. |
| -moveFromLocal <localSrc> <dest> | Copies the file or directory from the local file system identified by localSrc to dest within HDFS, and then deletes the local copy on success. |
| -get [-crc] <src> <localDest> | Copies the file or directory in HDFS identified by src to the local file system path identified by localDest. |
| -getmerge <src> <localDest> | Retrieves all files that match the path src in HDFS, and copies them to a single, merged file in the local file system identified by localDest. |
| -cat <filename> | Displays the contents of filename on stdout. |
| -copyToLocal <src> <localDest> | Identical to -get. |
| -moveToLocal <src> <localDest> | Works like -get, but deletes the HDFS copy on success. |
| -mkdir <path> | Creates a directory named path in HDFS. Creates any parent directories in path that are missing (e.g., mkdir -p in Linux). |
| -setrep [-R] [-w] rep <path> | Sets the target replication factor for files identified by path to rep. (The actual replication factor will move toward the target over time.) |
| -touchz <path> | Creates a zero-length file at path with the current time as its timestamp. Fails if a file already exists at path, unless the file is already size 0. |
| -test -[ezd] <path> | Exits with status 0 if path exists (-e), has zero length (-z), or is a directory (-d); exits with status 1 otherwise. |
| -stat [format] <path> | Prints information about path. Format is a string which accepts file size in blocks (%b), filename (%n), block size (%o), replication (%r), and modification date (%y, %Y). |
| -tail [-f] <filename> | Shows the last 1KB of file on stdout. |
| -chmod [-R] mode,mode,... <path>... | Changes the file permissions associated with one or more objects identified by path.... Performs changes recursively with -R. mode is a 3-digit octal mode, or {augo}+/-{rwxX}. Assumes a (all) if no scope is specified and does not apply an umask. |
| -chown [-R] [owner][:[group]] <path>... | Sets the owning user and/or group for files or directories identified by path.... Sets owner recursively if -R is specified. |
| -chgrp [-R] group <path>... | Sets the owning group for files or directories identified by path.... Sets group recursively if -R is specified. |
| -help <cmd-name> | Returns usage information for one of the commands listed above. You must omit the leading '-' character in cmd. |
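The -test operation reports its result through the exit status rather than through printed output, which makes it a natural fit for shell conditionals: on current Hadoop releases a status of 0 means the check succeeded. The sketch below wraps the existence check; hdfs_exists and the HADOOP_CMD override are hypothetical names used only for illustration.

```shell
#!/usr/bin/env bash
# Sketch: succeed if a path exists in HDFS, using the exit status of -test -e.
# hdfs_exists and HADOOP_CMD are hypothetical, not part of Hadoop.
hdfs_exists() {
  local hadoop=${HADOOP_CMD:-$HADOOP_HOME/bin/hadoop}
  "$hadoop" fs -test -e "$1"
}

# Usage:
#   if hdfs_exists /user/input; then
#     echo "/user/input is present"
#   else
#     echo "/user/input is missing"
#   fi
```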
